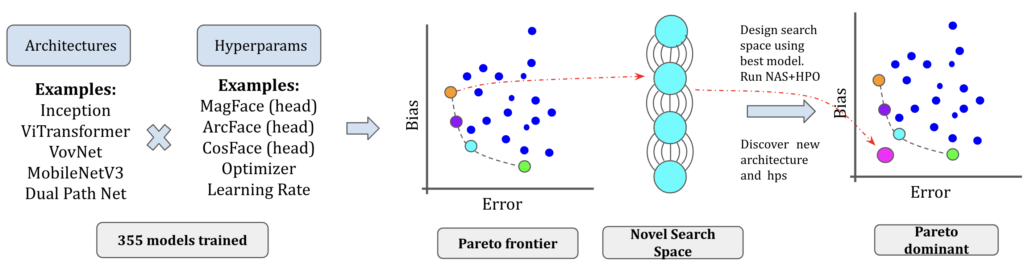

Deep learning is applied to a wide variety of socially consequential domains, e.g., credit scoring, fraud detection, hiring decisions, criminal recidivism, loan repayment, and face recognition. Many of these applications affect people's lives more than ever, often in biased ways. Dozens of formal definitions of fairness have been proposed, and many algorithmic techniques have been developed for debiasing according to these definitions. Most debiasing algorithms fit into one of three categories: pre-processing [1], in-processing [2], or post-processing [3]. Most of these bias mitigation strategies start by selecting a network architecture and a set of hyperparameters that are optimal in terms of accuracy, and then apply a mitigation strategy to reduce bias while minimally impacting accuracy. In contrast, our paper posits that the architecture and hyperparameters of the selected model are much more important and impactful than the mitigation strategy itself. Our large-scale empirical investigation on face recognition datasets supports this claim.

Toward Fair Face Recognition

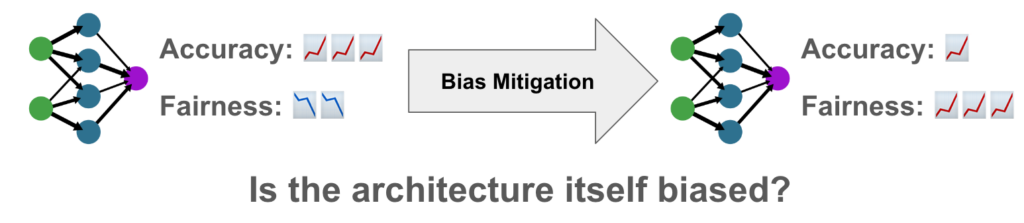

The conventional belief in the fairness community is that one should first find the highest-performing model for a given problem and then apply a bias mitigation strategy. One starts with an existing model architecture and hyperparameters, and then adjusts the model weights, learning procedure, or input data to make the model fairer using a pre-, in-, or post-processing bias mitigation technique. While these methods often improve the fairness metric, the improvement usually comes at the cost of a drop in model accuracy. We pose a fundamental question to these bias mitigation strategies: what if bias is inherent to the architecture design itself?

Large-Scale Analysis

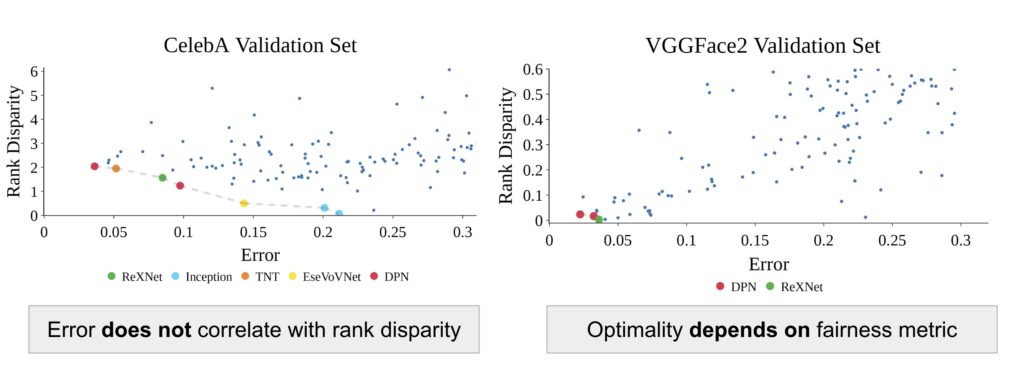

Our large-scale analysis of a variety of PyTorch image recognition models from timm [4] on the CelebA and VGGFace2 datasets reveals that error does not correlate well with fairness metrics. Moreover, which architecture is optimal often depends on the fairness metric of choice. Architectures have traditionally been designed to optimize performance metrics such as accuracy, and our study shows that this can be sub-optimal for fairness.
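To make the distinction between error and fairness concrete, here is a minimal sketch that scores a set of predictions on both axes. It assumes a simple disparity measure (the gap between the best and worst per-group accuracy), which is only one of many possible fairness metrics and not necessarily the one used in the paper. The function names and the toy data are hypothetical.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Return a dict mapping each protected-attribute group to its accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def error_and_disparity(y_true, y_pred, groups):
    """Return (overall error, accuracy gap between best and worst group).

    The accuracy gap is an assumed, illustrative disparity metric.
    """
    n_correct = sum(int(t == p) for t, p in zip(y_true, y_pred))
    overall_error = 1.0 - n_correct / len(y_true)
    acc = per_group_accuracy(y_true, y_pred, groups)
    disparity = max(acc.values()) - min(acc.values())
    return overall_error, disparity


# Toy identity labels and a binary protected attribute (hypothetical data).
y_true = [0, 1, 2, 3, 0, 1, 2, 3]
groups = ["f", "f", "f", "f", "m", "m", "m", "m"]

# Model A makes few mistakes, but all of them fall on one group.
pred_a = [0, 1, 2, 0, 0, 1, 2, 3]
# Model B makes more mistakes, spread evenly across groups.
pred_b = [0, 1, 0, 0, 0, 1, 0, 0]

result_a = error_and_disparity(y_true, pred_a, groups)
result_b = error_and_disparity(y_true, pred_b, groups)
print("model A (error, disparity):", result_a)
print("model B (error, disparity):", result_b)
```

In this toy case the more accurate model is also the less fair one under the assumed metric, which is the kind of mismatch between error and fairness described above.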

Joint NAS+HPO

Next, we construct a search space around the Dual Path Network [5], which our analysis identified as optimal in terms of accuracy on CelebA and VGGFace2. We then use multi-objective, multi-fidelity Bayesian optimization in SMAC3 [8] to automatically discover architectures and hyperparameters that are fairer than the handcrafted ones while remaining highly accurate on large-scale datasets such as CelebA and VGGFace2.
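A multi-objective search does not return a single best configuration but a set of trade-offs between objectives. The sketch below illustrates that idea by filtering candidates down to the Pareto front over (error, disparity), both to be minimized. It is not SMAC3's API and does not reproduce the search itself; the configuration names and scores are made up for illustration.

```python
def pareto_front(candidates):
    """Return the names of candidates not dominated by any other candidate.

    candidates: list of (name, error, disparity) tuples, lower is better
    for both objectives. A candidate is dominated if another candidate is
    at least as good on both objectives and strictly better on one.
    """
    front = []
    for name, err, disp in candidates:
        dominated = any(
            (e2 <= err and d2 <= disp) and (e2 < err or d2 < disp)
            for _, e2, d2 in candidates
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical evaluated configurations: (name, error, disparity).
evaluated = [
    ("config_a", 0.10, 0.30),  # most accurate, least fair
    ("config_b", 0.12, 0.10),
    ("config_c", 0.15, 0.20),  # worse than config_b on both objectives
    ("config_d", 0.20, 0.05),  # fairest, least accurate
]

front = pareto_front(evaluated)
print(front)
```

Here `config_c` is dropped because `config_b` beats it on both objectives, while the other three remain as incomparable trade-offs between accuracy and fairness.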

CelebA

VGGFace2

Transfer to other protected attributes and datasets

Furthermore, the discovered architectures transfer to other face recognition datasets with the same or different protected attributes (e.g., race in RFW [6]) with no further fine-tuning, while maintaining significant improvements over handcrafted architectures and hyperparameter choices.

We also transfer our pre-trained models to the AgeDB [7] dataset, which has age as a protected attribute. Our models outperform handcrafted architectures on this dataset too, on both accuracy and disparity metrics.

Finally, we transfer our models to six different face recognition datasets, outperforming the handcrafted architectures on all of them.

Linear Probing

But what makes these architectures fair? To answer this question, we insert linear probes in the last two layers of both the architectures discovered by SMAC and the handcrafted architectures. We find that gender can be classified from the SMAC architectures' representations with much lower accuracy than from the handcrafted ones. This indicates that these architectures do not exploit the protected attribute (gender) to make identity predictions, as desired.
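For readers unfamiliar with linear probing, the following is a minimal sketch of the idea: freeze the network, take the features from a given layer, and fit a linear classifier that tries to predict the protected attribute from them. Lower probe accuracy suggests the attribute is less linearly decodable from that layer. Everything here is an illustrative assumption: the features are tiny hand-written vectors rather than real network activations, the probe is plain logistic regression trained by gradient descent, and accuracy is measured on the training points for brevity (a real probe would use held-out data).

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_linear_probe(features, labels, lr=0.5, steps=200):
    """Fit logistic regression on frozen features with batch gradient descent."""
    dim = len(features[0])
    n = len(features)
    w = [0.0] * dim
    b = 0.0
    for _ in range(steps):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            for j in range(dim):
                grad_w[j] += (p - y) * x[j] / n
            grad_b += (p - y) / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def probe_accuracy(features, labels, w, b):
    """Fraction of samples whose attribute the probe predicts correctly."""
    correct = 0
    for x, y in zip(features, labels):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        correct += int((p > 0.5) == bool(y))
    return correct / len(labels)


# Hypothetical binary protected-attribute labels for four samples.
attribute = [1, 1, 0, 0]

# Features in which the attribute is linearly decodable (first coordinate).
leaky_features = [[1.0, 0.2], [0.9, -0.1], [-1.0, 0.1], [-0.8, -0.2]]
# Features in which identical vectors occur with both attribute values.
invariant_features = [[1.0, 0.0], [-1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]

leaky_acc = probe_accuracy(
    leaky_features, attribute, *fit_linear_probe(leaky_features, attribute)
)
invariant_acc = probe_accuracy(
    invariant_features, attribute, *fit_linear_probe(invariant_features, attribute)
)
print("probe accuracy on leaky features:", leaky_acc)
print("probe accuracy on invariant features:", invariant_acc)
```

The probe separates the first feature set perfectly and stays at chance on the second, mirroring the qualitative difference between representations that encode the protected attribute and those that do not.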

TL;DR: Architectures and hyperparameters have a big influence on fairness; exploit that knowledge to discover better architectures from the ground up!

We expect future work in this direction to focus on studying different multi-objective algorithms and NAS techniques to search for inherently fairer models more efficiently. It would also be interesting to study how the properties of the discovered architectures translate across different demographics and populations. Another potential direction is to incorporate experts' priors and beliefs about fairness in society into NAS+HPO methods, so that the search for fairer models benefits from expert knowledge.

Check out the details of our paper and code below:

Paper: Rethinking Bias Mitigation: Fairer Architectures Make for Fairer Face Recognition.

Code: https://github.com/dooleys/fr-nas

References

[1] Feldman, M., Friedler, S.A., Moeller, J., Scheidegger, C. and Venkatasubramanian, S., 2015, August. Certifying and removing disparate impact. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 259-268).

[2] Zafar, U., Ghafoor, M., Zia, T., Ahmed, G., Latif, A., Malik, K.R. and Sharif, A.M., 2019. Face recognition with Bayesian convolutional networks for robust surveillance systems. EURASIP Journal on Image and Video Processing, 2019, pp.1-10.

[3] Hardt, M., Price, E. and Srebro, N., 2016. Equality of opportunity in supervised learning. Advances in neural information processing systems, 29.

[4] https://github.com/huggingface/pytorch-image-models/tree/main/timm

[5] Chen, Y., Li, J., Xiao, H., Jin, X., Yan, S. and Feng, J., 2017. Dual path networks. Advances in neural information processing systems, 30.

[6] Wang, M., Deng, W., Hu, J., Tao, X. and Huang, Y., 2019. Racial faces in the wild: Reducing racial bias by information maximization adaptation network. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 692-702).

[7] Moschoglou, S., Papaioannou, A., Sagonas, C., Deng, J., Kotsia, I. and Zafeiriou, S., 2017. AgeDB: the first manually collected, in-the-wild age database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 51-59).

[8] Lindauer, M., Eggensperger, K., Feurer, M., Biedenkapp, A., Deng, D., Benjamins, C., Ruhkopf, T., Sass, R. and Hutter, F., 2022. SMAC3: A versatile Bayesian optimization package for hyperparameter optimization. The Journal of Machine Learning Research, 23(1), pp.2475-2483.