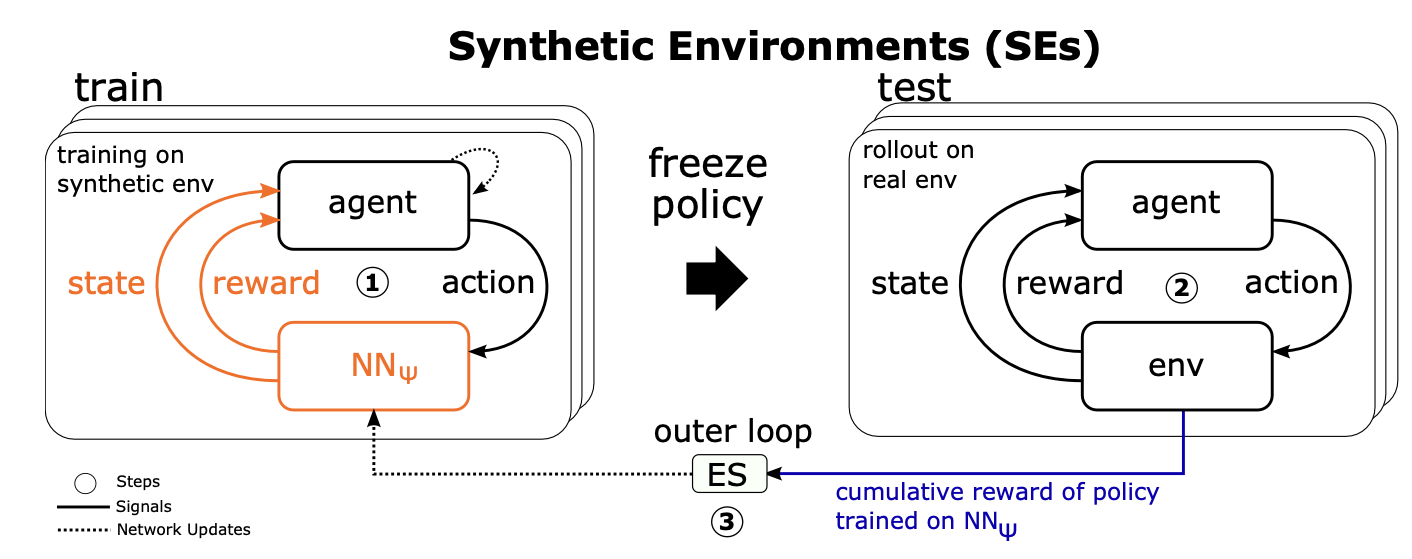

Carolin Benjamins, Theresa Eimer, Frederik Schubert, Aditya Mohan, Sebastian Döhler, André Biedenkapp, Bodo Rosenhahn, Frank Hutter and Marius Lindauer

TLDR: We can model and investigate generalization in RL with contextual RL and our benchmark library CARL. In theory, without adding context we cannot achieve optimal performance, and in the experiments we saw that using context […]

Contextualize Me – The Case for Context in Reinforcement Learning

Posted on June 5, 2023 by Theresa Eimer